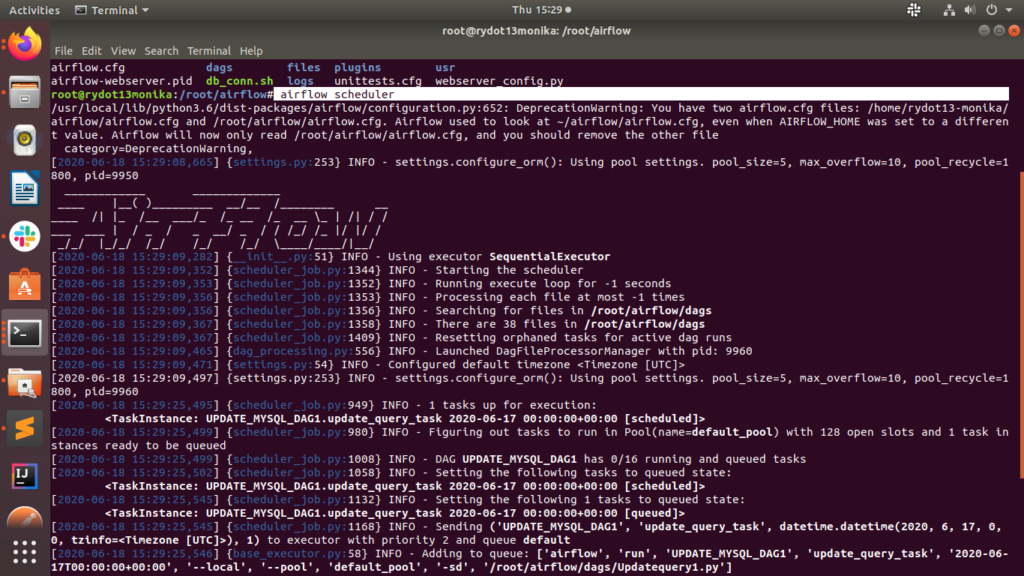

I did find a solution that works for me on Ubuntu 18.04 and 18. The relevant `airflow.cfg` entries: `sql_alchemy_conn = postgresql+psycopg2://:5432/airflow`, `api_client = _client`, `celery_app_name = airflow.executors.`

I know I'm digging up a dated post, but I too was trying to figure out why I could not get the scheduler to run automatically when the server is running.

In general, we see this message when the environment doesn't have resources available to execute a DAG. The DAGs list may not update, and new tasks will not be scheduled.

I also tried to use the worker container separately; it did not help. Flower shows what the worker itself created, but the worker container doesn't show a single line of logs. When I try to schedule the jobs, the scheduler is able to pick them up and queue them, which I can see in the UI, but the tasks are not running. I tried setting `celery_result_backend` and `broker_url` to `redis://redis:6379/1`, but to no avail.

I am new to Airflow and trying to set up Airflow to run ETL pipelines. The imports: `from airflow import settings`, `from airflow.models import dagrun`, `from import DagModel`, `from import DagRun`, `from import TaskInstance`, `from import DagRunType`, `from import State`, `from sqlalchemy import and_, func, not_, or_, tuple_`, `from import UtcDateTime, nullsfirst`.

I don't even know what these processes were, as I can't really see them after they are killed. As in: `airflow trigger_dag DAG_NAME`. After waiting for it to finish killing whatever processes it is killing, it starts executing all of the tasks properly.

Airflow message: "The scheduler does not appear to be running. Last heartbeat was received 1 day ago. The DAGs list may not update, and new tasks will not be scheduled."

The status of the scheduler depends on when the latest scheduler heartbeat was received.
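The heartbeat rule above can be sketched in a few lines of Python. This is only an illustration, not Airflow's own implementation: the function names are hypothetical, the payload shape follows what Airflow's `/health` endpoint is documented to return, and the 30-second threshold is an assumption based on the default of `scheduler_health_check_threshold`, which may differ in your version or configuration.

```python
from datetime import datetime, timedelta, timezone


def heartbeat_age(latest_heartbeat: str, now: datetime) -> timedelta:
    """Return how long ago the given ISO-8601 heartbeat timestamp was."""
    beat = datetime.fromisoformat(latest_heartbeat)
    if beat.tzinfo is None:
        # Assumption: a timestamp without an offset is treated as UTC.
        beat = beat.replace(tzinfo=timezone.utc)
    return now - beat


def scheduler_status(health: dict, now: datetime, threshold_seconds: int = 30) -> str:
    """Classify the scheduler as 'healthy' or 'unhealthy' from a health payload.

    `health` is assumed to look like:
    {"scheduler": {"latest_scheduler_heartbeat": "2024-01-02 11:59:50+00:00"}}
    """
    beat = health.get("scheduler", {}).get("latest_scheduler_heartbeat")
    if not beat:
        # No heartbeat recorded at all: treat as unhealthy.
        return "unhealthy"
    age = heartbeat_age(beat, now)
    return "healthy" if age <= timedelta(seconds=threshold_seconds) else "unhealthy"
```

A heartbeat that is one day old, as in the message above, would be classified as unhealthy by this check, while one received a few seconds ago would pass.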

#AIRFLOW SCHEDULER NOT STARTING UPGRADE#

Good morning. After upgrading Google Composer to version 1.18 and Apache Airflow to version 1.10.15 (using the auto upgrade from Composer), the scheduler does not seem to be able to start.

2) In your `airflow.cfg` file, `dags_are_paused_at_creation = True` is the default, so all new DAGs you create are paused from the start. (Toggling the DAG on in the UI fixed my initial issue.)

I have configured Airflow with a MySQL metadata DB and the LocalExecutor. When I tried to start my webserver, it was unable to start.

What happened: I'm running Airflow in Kubernetes. I have installed Airflow from the GitHub source.
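To check what the `dags_are_paused_at_creation` setting mentioned above is in a given config file, something like the following sketch could work. It assumes the option lives in the `[core]` section of `airflow.cfg` and that a missing entry means the default of `True`. The helper name and the sample config text are made up for illustration.

```python
import configparser

# Hypothetical airflow.cfg excerpt, used only for this example.
SAMPLE_CFG = """
[core]
dags_are_paused_at_creation = True
"""


def dags_paused_at_creation(cfg_text: str) -> bool:
    """Return the value of dags_are_paused_at_creation from config text."""
    # Interpolation is disabled so '%' characters elsewhere in the file
    # do not raise errors while parsing.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    # Assumption: when the option is absent, the default is True.
    return parser.getboolean("core", "dags_are_paused_at_creation", fallback=True)


print(dags_paused_at_creation(SAMPLE_CFG))
```

If this returns `True`, every newly created DAG starts out paused and has to be switched on before the scheduler will run it.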

#AIRFLOW SCHEDULER NOT STARTING INSTALL#

1) In the Airflow UI, toggle the button left of the DAG from 'Off' to 'On'. Off means that the DAG is paused, so On will allow the scheduler to pick it up and complete the DAG.

In this case, it does not matter if you installed Airflow in a virtual environment or system-wide. Steps to solve the issue: install gunicorn 19.9.0. Stop the Airflow servers (webserver and scheduler) with `pkill -f 'airflow scheduler'` and `pkill -f 'airflow webserver'`. Now use `ps -aux | grep airflow` again to check whether they are really shut down. If the output contains a line such as `S 12:19 0:00 airflow scheduler - DagFileProcessorManager`, that means the Airflow scheduler is running.

The status of each component can be either healthy or unhealthy. The status of the metadatabase depends on whether a valid connection can be initiated with the database.

First state: the task is not ready for retry yet but will be retried automatically.

I'm testing the use of Airflow, and after triggering a (seemingly) large number of DAGs at the same time, it seems to just fail to schedule anything and starts killing processes. It seems to happen only after I trigger a large number (>100) of DAGs at about the same time using external triggering. I'm using a LocalExecutor with a PostgreSQL backend DB. These are the logs the scheduler prints: `WARNING - Killing PID 42177`

We developed k8s-spark-scheduler to solve the two main problems we experienced when running Spark on Kubernetes in our production environments: unreliable.
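The `ps` check described above can also be scripted. The sketch below filters process-listing text for Airflow entries. The function names are hypothetical, and the user and PID columns in the sample are invented for illustration; only the tail of the first sample line comes from the output quoted above.

```python
import subprocess
from typing import List


def airflow_processes(ps_output: str) -> List[str]:
    """Return the lines of `ps` output that mention airflow, ignoring grep itself."""
    matches = []
    for line in ps_output.splitlines():
        if "airflow" in line and "grep" not in line:
            matches.append(line.strip())
    return matches


def current_airflow_processes() -> List[str]:
    """Run `ps aux` on this machine and filter its output (Unix-like systems only)."""
    result = subprocess.run(["ps", "aux"], capture_output=True, text=True, check=True)
    return airflow_processes(result.stdout)


# Sample listing: the user and PID columns are made up for the example.
SAMPLE_PS = """\
user  1234  0.0  0.5  S  12:19  0:00 airflow scheduler - DagFileProcessorManager
user  1300  0.0  0.0  S  12:20  0:00 grep airflow
"""

for line in airflow_processes(SAMPLE_PS):
    print(line)
```

An empty result after running the `pkill` commands suggests the webserver and scheduler are really shut down. Any remaining line means a process is still alive.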
